Expired: j2ue posted Jul 30, 2021 09:31 AM

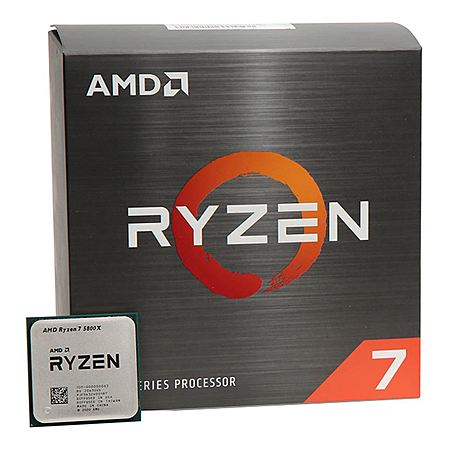

AMD Ryzen 7 5800X 3.8 GHz Eight-Core AM4 Processor

$360 + Free Store Pickup (was $450, 20% off) at Micro Center


Top Comments

117 Comments


If you're doing 4k gaming on a card that can legit handle 4k, your CPU is virtually irrelevant for most games.

You'd barely notice running the highest end 2021 CPU available versus an i7 from 2013.

If you're running CPU-bound lower res stuff, then you'll notice a fair bit more difference.

https://cdn.mos.cms.fut

8% fps difference on a 3080 at 4k/Ultra across 9 different games between 10th gen i9s and a 4th gen (2013) i7.

0.2 fps difference between an i3 10th gen and a Ryzen 3600, another 0.3 difference from the 3600 to a 3900X.

Basically no difference.... and you'll get essentially no improvement from a CPU upgrade if your CPU is like anything made in the last 5 years.

Same 9 games at 1080p/medium on same 3080 and same CPUs-

https://cdn.mos.cms.fut

80 fps difference from the 2013 i7 to the 10th gen i9. 12 fps bump from the i3 to the Ryzen 3600, another 14 fps from the 3600 to the 3900X.

And obviously for productivity work the difference can be pretty significant.

The real benefit of PCIe 4.0 is that you can hit the same speeds with half the lanes, so the limited PCIe lanes can be split among other devices, or you can even use a specialized card to turn one PCIe 4.0 slot into multiple PCIe 3.0 slots, without bottlenecking the devices.
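The "same speeds with half the lanes" point can be sanity-checked with a bit of arithmetic. This is only a rough sketch: it uses the published per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0, both with 128b/130b encoding) and ignores protocol overhead, so real-world throughput will be somewhat lower. The function name `pcie_gb_per_s` is made up for the example.

```python
# Per-lane raw transfer rates in GT/s for PCIe 3.0 and 4.0.
GT_PER_S = {3: 8.0, 4: 16.0}
# Both generations use 128b/130b line encoding.
ENCODING = 128 / 130


def pcie_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, ignoring protocol overhead."""
    # GT/s * encoding efficiency gives usable Gbit/s per lane; /8 converts to GB/s.
    return GT_PER_S[gen] * ENCODING / 8 * lanes


gen3_x16 = pcie_gb_per_s(3, 16)
gen4_x8 = pcie_gb_per_s(4, 8)

print(f"PCIe 3.0 x16: {gen3_x16:.2f} GB/s")
print(f"PCIe 4.0 x8:  {gen4_x8:.2f} GB/s")
```

Both lines come out to about 15.75 GB/s, which is why a x8 PCIe 4.0 link can stand in for a x16 PCIe 3.0 link and leave the other lanes free.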

CP's biggest problems were on the last-gen consoles.

I played on a Ryzen 3600 and an OCed 3090 in 4k/Ultra with ray tracing... played every bit of content in the game, north of 120 hours.

One side quest was bugged and unable to start. Everything else worked fine.

FWIW- did the same with RDR2 on that setup.

Borderlands 3 I played at 4k/ultra on my previous system- an i7-4770k with an OCed 1080ti.

Just about nothing is CPU bound at 4k so the CPU doesn't matter significantly.

At 1080p, with a newer GPU, a LOT of stuff will be CPU bound and you will get significantly higher frame rates.

In your case, the RX580 isn't a super powerful card- you remained GPU bound.

To illustrate this:

https://www.pcgamesn.co

At Ultra 1080p on BL3, even an RX590 can't hit 60 fps...46 fps average, 35 for lows.

It's held back by the weak GPU- and you need to drop down to medium settings at 1080p unless you're ok with console-like frame rates.

https://www.dsogaming.c

Here they also do both GPU and CPU testing.

The RX580 again is at a console-like low-to-mid 30s fps at 1080p/ultra, even with a high end CPU thrown at it. Because the GPU is so restrictive here, upgrading the CPU wouldn't help, since the GPU is already being asked to do too much.

But they ALSO test the other way. They put a 2080TI in there, so that the game isn't GPU bound at all at 1080p. Then test different CPUs.

Now changing the CPU SIGNIFICANTLY improves performance...

Jumping from 47 fps min, 75 fps average up to 90 fps min and 115 fps average between the slowest and fastest CPUs once you remove GPU as a restriction.
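A very rough way to picture the GPU-bound vs CPU-bound argument is to treat the frame rate as the lower of what the CPU can feed and what the GPU can draw. The sketch below is a simplification, not how the benchmarks were run. The 75 and 115 fps averages are the 2080 Ti figures quoted above, treated here as CPU caps on the assumption that the 2080 Ti runs were not GPU bound. The RX580 cap of 33 fps (a stand-in for "low-mid 30s") and the 2080 Ti cap of 115 fps are assumed values, and the function names are made up.

```python
def delivered_fps(cpu_cap: float, gpu_cap: float) -> float:
    """Simplified model: the slower of the two stages sets the frame rate."""
    return min(cpu_cap, gpu_cap)


# Average fps quoted above for the slowest and fastest CPUs with a 2080 Ti at 1080p,
# treated as CPU-side caps (assumption: those runs were not GPU bound).
SLOW_CPU_CAP = 75.0
FAST_CPU_CAP = 115.0

# Assumed GPU-side caps, for illustration only.
RX580_CAP = 33.0        # stand-in for "low-mid 30s" at 1080p/ultra
RTX_2080TI_CAP = 115.0  # only known to be at least the fastest CPU result


def cpu_upgrade_gain(gpu_cap: float) -> float:
    """Fps gained by swapping the slowest CPU for the fastest one on a given GPU."""
    return delivered_fps(FAST_CPU_CAP, gpu_cap) - delivered_fps(SLOW_CPU_CAP, gpu_cap)


print(cpu_upgrade_gain(RX580_CAP))       # 0.0  -> GPU bound, CPU upgrade does nothing
print(cpu_upgrade_gain(RTX_2080TI_CAP))  # 40.0 -> CPU bound, CPU upgrade pays off
```

Real games don't behave this cleanly, but the model captures the point: with the RX580 the GPU cap is hit long before either CPU matters.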

Yup- and honestly this isn't really noticeable NOW... but it might get more significant as DirectStorage becomes a real thing on PCs.

Xbox Boss Admits That Ray Tracing Might Be Overrated

https://www.thefpsrevie

I was just pointing out your claims don't hold up to actual measured results.

An old CPU was "fine" for you not because any recent CPU is always fine, but because you were running a GPU that was over 4 years old and not top of the line even then, which made your gaming experience heavily GPU bound.

As the source I provided shows, if you'd had a better GPU, then bumping your CPU would have offered much better performance.

Of course if you're someone who doesn't care if they're running low res and low settings at low frame rate- run it on a potato, you'll be fine.

But then why even game on a PC at that point? A console will get you mediocre graphics and frame-rates a lot cheaper, and you could've flipped your video card for 2x the cost of a new console during the squeeze.

Xbox Boss Admits That Ray Tracing Might Be Overrated

https://www.thefpsrevie

Same deal here.

The HW in the Xbox is deeply inadequate for this task, especially at higher resolutions- even more so in that it can't support DLSS either, so of course the head Xbox guy is gonna downplay a feature his system sucks at.
