expiredphoinix | Staff posted Jun 16, 2025 07:45 AM


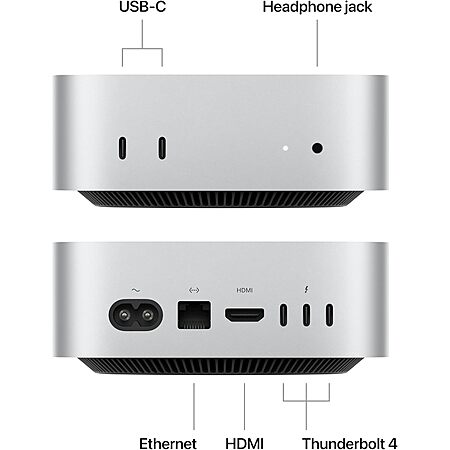

Apple Mac Mini (2024): M4 10-Core CPU / GPU, 24GB Memory, 512GB SSD

$849 + Free Shipping (reg. $999, 15% off) at Amazon


Top Comments

Apple has a long history of not only putting less RAM in its products but still having apps run smoother, and its SoCs benchmark BETTER than many of the QCA chip stacks they go up against, all while being more power efficient.

Having memory on die probably changes a lot of performance parameters and acts like a very high speed cache. You should compare benchmarks instead of focusing solely on specs, because the specs don't compare how you think they do.

An upcharge of $400 for an additional 8GB of RAM and a 256GB larger SSD doesn't feel slick. But if you need the extra RAM, then this is the only option, as I've read the RAM is integrated directly into the Apple M4 chip and soldered to the logic board.

44 Comments


https://buyrouterswitch

That is the key thing that enables them to hit higher benchmarks with less memory.

So it's not just memory bandwidth (although that is part of it); it is bandwidth plus the entire architecture of how the CPU and GPU are tightly integrated into the same memory pool.

The whole point / advantage of memory on die is very low latency and very high bandwidth. You are likely correct that it's not actually part of the same silicon. But I guess the point I was getting at is that they are achieving the same thing via their UMA design and implementation.


All of the benefits of "unified memory" as described in that article have existed in basic form for literally decades (e.g. on any Intel/AMD cpu with integrated graphics). Apple was the first to increase the bandwidth to far exceed standard PC DIMM setups, and pair it with a faster GPU. But now, as I mentioned, x86 chips are doing the same thing.

Well, I'm glad at least you admit that. That's the core of the special sauce when it's part of UMA.

> All of the benefits of "unified memory" as described in that article have existed in basic form for literally decades (e.g. on any Intel/AMD cpu with integrated graphics).

Integrated graphics are notoriously horrible in the PC gaming community, so they're not the best choice to highlight the power of unified memory. The benchmarks speak for themselves.


Also, that "huge PITA" process is a couple of screws, and anyone with the slightest bit of skill could do it.
